I am actively seeking a PhD position in Robotics & CV in the USA for Fall 2026!

I am a Master's student at the Georgia Institute of Technology (since Fall 2024), in the Robot Learning and Reasoning (RL2) Lab advised by Prof. Danfei Xu. I also work closely with Prof. Harish Ravichandar.

Previously, I earned my B.Eng. from the University of Electronic Science and Technology of China (2020–2024), where I was a member of the Data Intelligence Group advised by Prof. Wen Li.

I also had the privilege of working with Prof. Junsong Yuan during my internship at the University at Buffalo.

My research lies at the intersection of robotics and computer vision:

• Robot Learning from Human Video: Learning robot skills through imitation learning from passive human videos, with the eventual goal of extending to web-scale internet videos.

• Dexterous Manipulation: Learning human-level dexterity by combining imitation learning and reinforcement learning, especially for challenging humanoid bimanual dexterous manipulation tasks.

• Robot Learning Systems: Developing a full-stack, universal framework for learning from multimodal human data, co-designed from hardware through algorithm optimization.

Outside research, I enjoy basketball, badminton, music (classical & saxophone), and traveling. I'm a fan of LeBron James, Max Verstappen, and Cristiano Ronaldo.

Education

Georgia Institute of Technology

Aug 2024 - June 2026 (Expected)

Master of Science in Robotics (College of Computing)

University of Electronic Science and Technology of China

Sep 2020 - June 2024

Bachelor of Engineering in Artificial Intelligence (College of Computer Science)

Publications & Preprints

* Equal contribution † Corresponding author

Robotics

EgoEngine: From Egocentric Human Videos to High-Fidelity Dexterous Robot Demonstrations

Shuo Cheng*, Yangcen Liu*, Yiran Yang, Xinchen Yin, Woo Chul Shin, Mengying Lin, Danfei Xu†

Coming Soon! /

Project Page

EgoEngine is a powerful data engine that converts egocentric human videos into robot-executable demonstrations. By coupling an action branch (retargeting with reinforcement learning refinement) and a visual branch (arm-hand inpainting with robot rendering blending) under the same task, EgoEngine minimizes action and visual gaps to yield scalable, executable robot data.

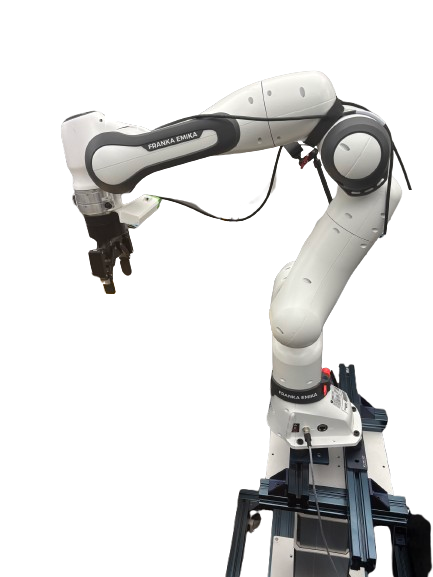

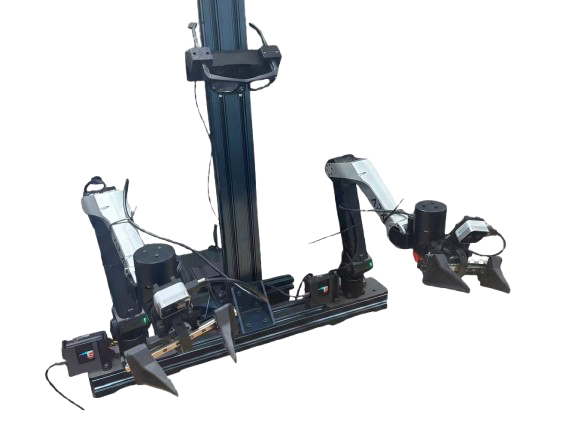

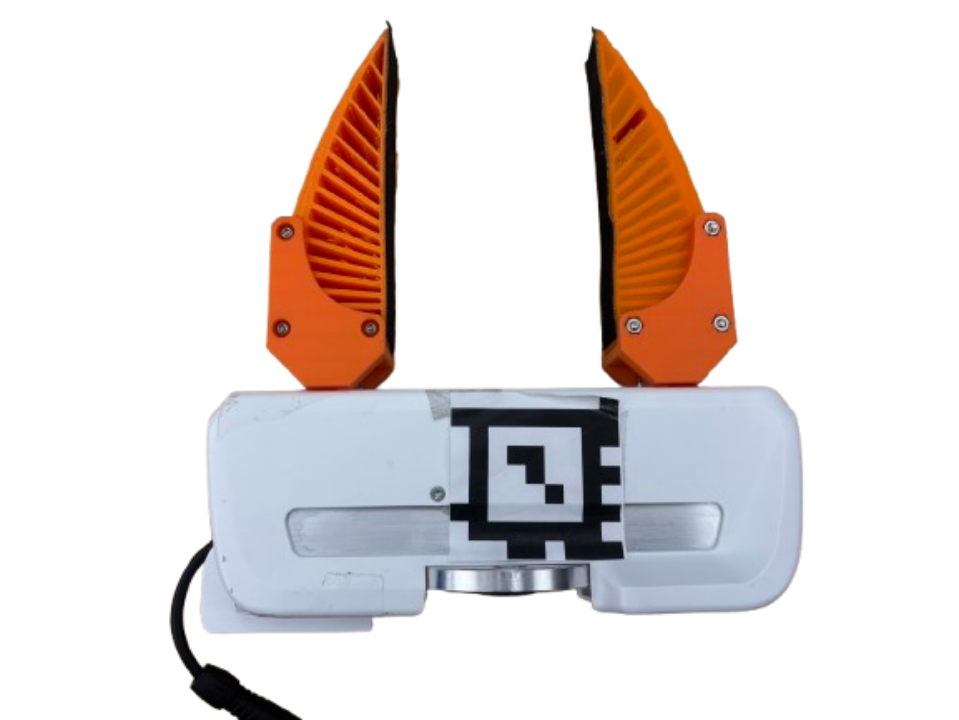

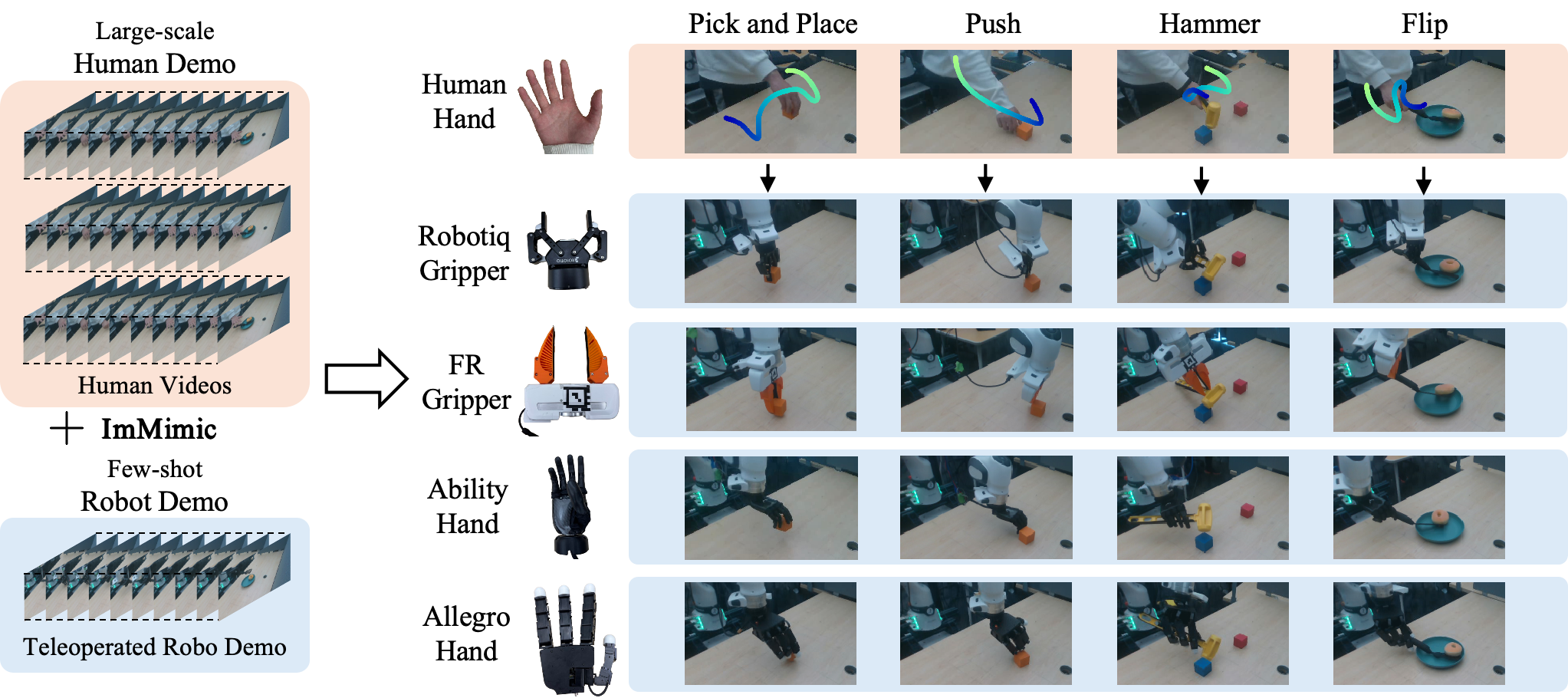

ImMimic: Cross-Domain Imitation from Human Videos via Mapping and Interpolation

Yangcen Liu*, Woochul Shin*, Yunhai Han, Zhenyang Chen, Harish Ravichandar, Danfei Xu†

CoRL 2025 🏆 Oral,

RSS 2025 Dex WS 🏆 Spotlight

CoRL 2025 H2R WS /

Project Page /

Paper /

Talk 01:24:00

ImMimic is an embodiment-agnostic co-training framework designed to bridge the domain gap between large-scale human videos and limited robot demonstrations. By leveraging dynamic time warping (DTW) for human-to-robot mapping and MixUp-based interpolation for domain adaptation, ImMimic enhances task performance across all embodiments.

Video Processing

Bridge the Gap: From Weak to Full Supervision for Temporal Action Localization with PseudoFormer

Ziyi Liu†, Yangcen Liu (First Student Author, Primary Contributor)

CVPR 2025 /

Paper

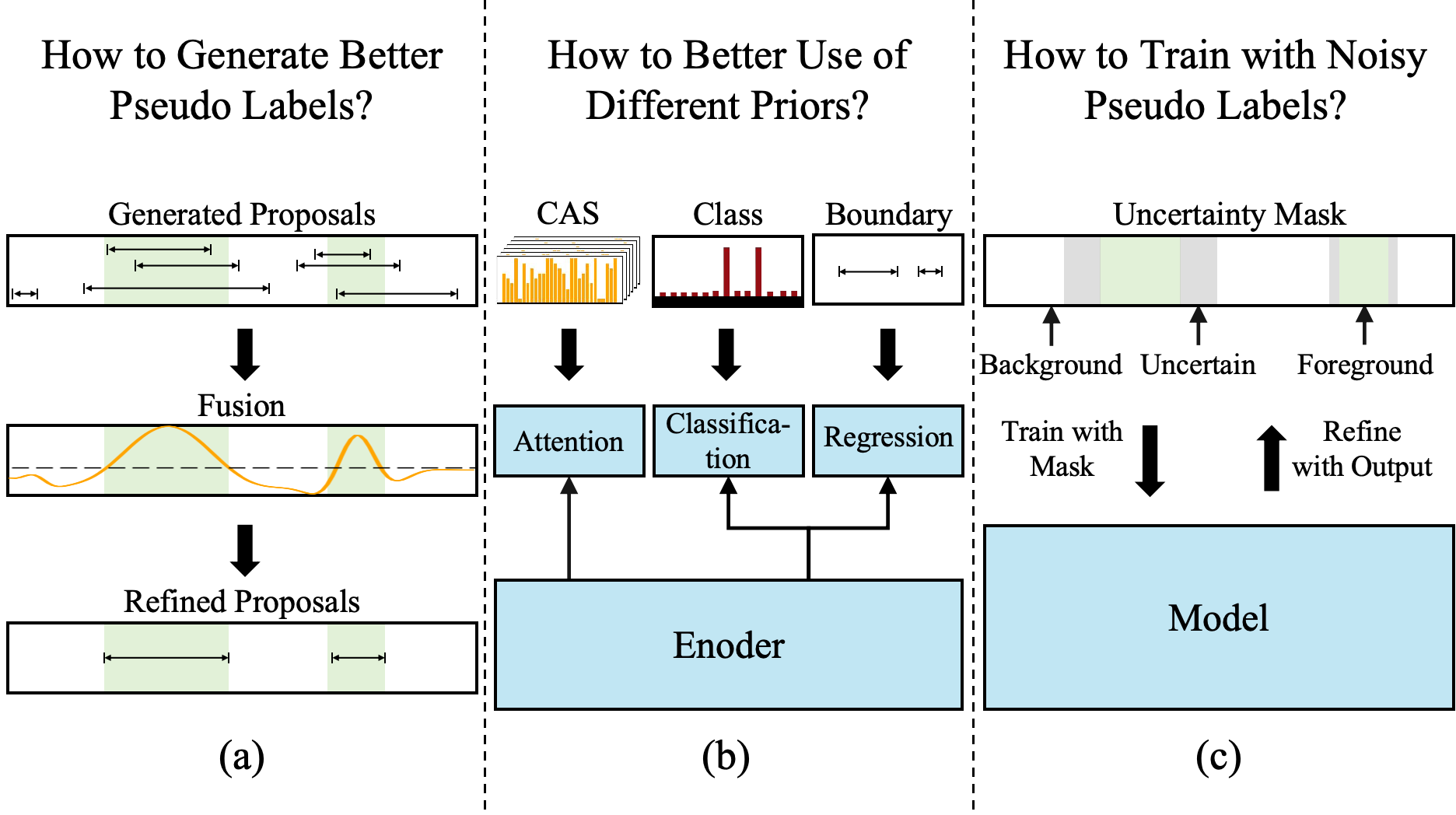

PseudoFormer is a two-branch framework for temporal action localization that bridges the gap between weakly and fully supervised learning. It generates high-quality pseudo labels via cross-branch fusion and leverages them to train a fully supervised branch, achieving state-of-the-art performance on THUMOS14 and ActivityNet1.3.

STAT: Towards Generalizable Temporal Action Localization

Yangcen Liu, Ziyi Liu†, Yuanhao Zhai, Wen Li, David Doermann, Junsong Yuan

arXiv /

Paper

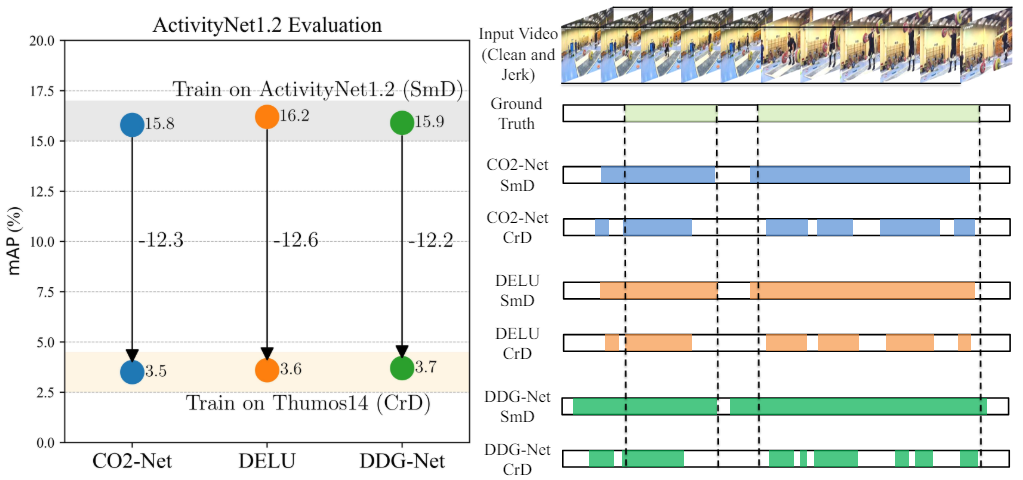

STAT is a self-supervised teacher-student framework for weakly-supervised temporal action localization, designed to improve generalization across diverse distributions. It adapts to varying action durations, achieving strong cross-dataset performance on THUMOS14, ActivityNet1.2, and HACS.

Simultaneous Detection and Interaction Reasoning for Object-Centric Action Recognition

Xunsong Li, Pengzhan Sun, Yangcen Liu, Lixin Duan, Wen Li†

TMM 2025 /

Paper

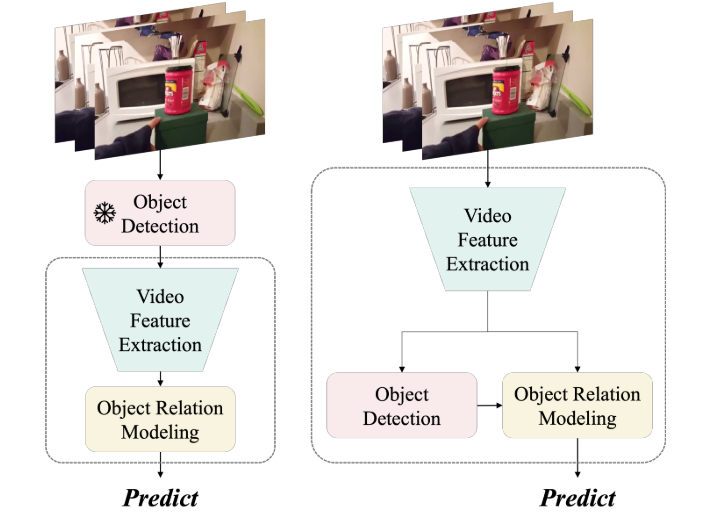

DAIR is an end-to-end framework for object-centric action recognition that jointly detects relevant objects and reasons about their interactions. It integrates object decoding, interaction refinement, and relational modeling without relying on a pre-trained detector, achieving strong results on Something-Else and IKEA-Assembly.

AIGC

Structure-Aware Human Body Reshaping with Adaptive Affinity-Graph Network

Yangcen Liu*, Qiwen Deng*, Wen Li, Guoqing Wang†

WACV 2025 /

Paper

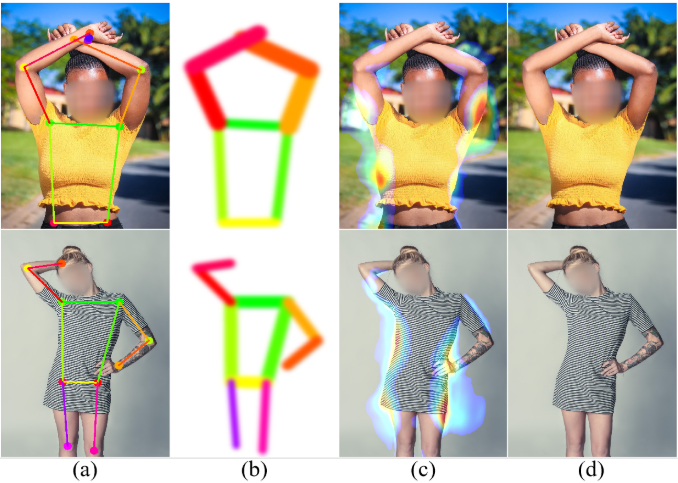

AAGN is a structure-aware framework for automatic human body reshaping in portraits that leverages an Adaptive Affinity-Graph block to model global relationships between body parts and a Body Shape Discriminator guided by an SRM filter to ensure high-frequency detail preservation. Trained on BR-5K, it generates coherent and aesthetically improved reshaping results.

Experience

Research Assistant, Georgia Institute of Technology

Aug 2024 -- Now

Lab: Robot Learning and Reasoning Lab

Supervisor: Prof. Danfei Xu

AIGC Algorithm Researcher Intern, RED

Jul 2023 -- Sep 2023

Worked at Red Note (Xiaohongshu), a social media and e-commerce platform with 300M+ active users (as of 2024)

Research Assistant, University at Buffalo (SUNY)

Mar 2023 -- Dec 2023

Lab: Visual Computing Lab

Supervisor: Prof. Junsong Yuan

Collaborated with Prof. Ziyi Liu, Yuanhao Zhai, and Prof. David Doermann

Research Assistant, University of Electronic Science and Technology of China

Mar 2022 -- Feb 2024

Lab: Data Intelligence Group

Supervisor: Prof. Wen Li

Collaborated with Prof. Lixin Duan, Prof. Shuhang Gu, and Xunsong Li

Teaching

Teaching Assistant, Georgia Institute of Technology

Aug 2025 -- Dec 2025

Course: Deep Learning (CS 4644/7643) Fall 2025

Supervisor: Prof. Danfei Xu

Teaching Assistant, Georgia Institute of Technology

Jan 2025 -- May 2025

Course: Deep Learning (OMSCS 4644/7643) Spring 2025

Supervisor: Prof. Zsolt Kira

Service

CVPR 2026, ICRA 2026, CoRL 2025, MM 2024, MM 2023.